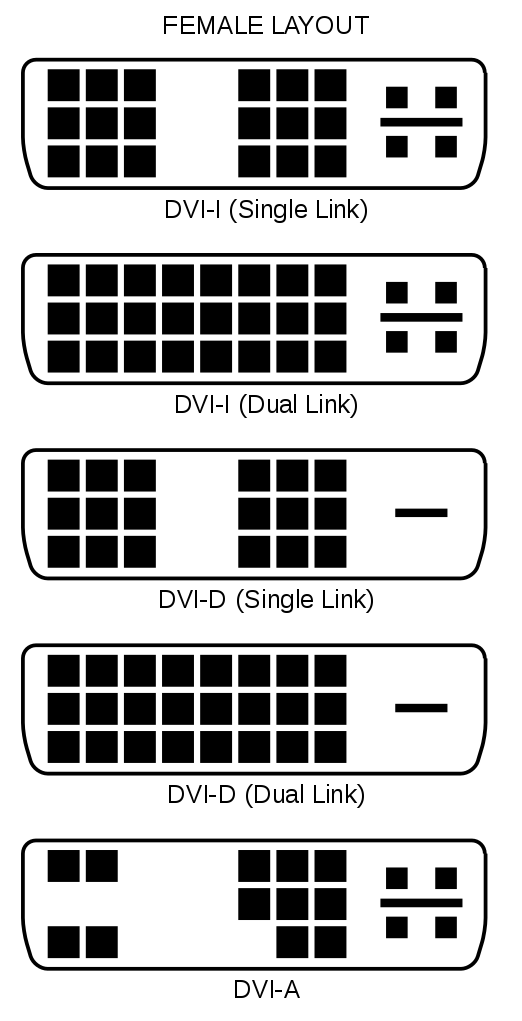

Most current NVIDIA-based graphics cards feature a Digital Visual Interface (DVI) connector for connecting a digital flat panel LCD monitor or projector to the card. A DVI connector is characteristically colored white (as opposed to a VGA connector, which is colored blue). The number and layout of the pins vary depending on the type of DVI connector found on the graphics card.

A DVI-D connector on a graphics card sends out a digital signal only. A DVI-I connector carries both an analog and a digital signal: it can send a digital signal to digital displays such as flat panel LCD monitors, and an analog signal to older displays such as CRT monitors through a DVI-to-VGA adapter.

NVIDIA-based graphics cards that carry a DVI-I connector are fully compatible with flat panel LCD monitors, which typically have DVI-D cables. The DVI-D cable reads only the digital signal from the DVI-I connector on the graphics card and ignores the analog signal.

If your NVIDIA-based graphics card features two video out connectors (e.g., VGA + DVI), it does not necessarily mean that it supports dual monitors at the same time. Some NVIDIA-based graphics cards feature a DVI-D connector as well as a VGA connector. These cards generally support only a single display at a time, not dual monitors. The reason for having two different types of connectors is to allow you to connect either an analog or a digital display to your PC.
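The connector compatibility described above can be sketched as a small lookup. This is only an illustrative model, not an NVIDIA tool or API: the names `Signal`, `CONNECTOR_SIGNALS`, `usable_signals`, and `can_connect` are hypothetical, and the model looks at signal types only, so it assumes any required physical adapter (such as DVI-to-VGA) is available.

```python
from enum import Enum, auto


class Signal(Enum):
    DIGITAL = auto()
    ANALOG = auto()


# Signals carried by each connector type, as described in the text.
# (Hypothetical table for illustration only.)
CONNECTOR_SIGNALS = {
    "DVI-D": {Signal.DIGITAL},
    "DVI-I": {Signal.DIGITAL, Signal.ANALOG},
    "VGA": {Signal.ANALOG},
}


def usable_signals(card_connector, display_connector):
    """Return the set of signals that both ends have in common."""
    return CONNECTOR_SIGNALS[card_connector] & CONNECTOR_SIGNALS[display_connector]


def can_connect(card_connector, display_connector):
    """True if the card and the display share at least one signal type."""
    return bool(usable_signals(card_connector, display_connector))


if __name__ == "__main__":
    # A DVI-I card with a DVI-D cable: only the digital signal is used.
    print(usable_signals("DVI-I", "DVI-D"))
    # A DVI-D card cannot drive an analog VGA display.
    print(can_connect("DVI-D", "VGA"))
```

In this sketch a DVI-I card paired with a DVI-D cable shares only the digital signal, which matches the behavior described above, while a DVI-D card and a VGA display share no signal at all.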

Beginning with the Pascal architecture, NVIDIA cards no longer support analog display connections. This affects DVI-I as well as VGA. The currently supported interfaces are:

DVI-D

HDMI

DisplayPort